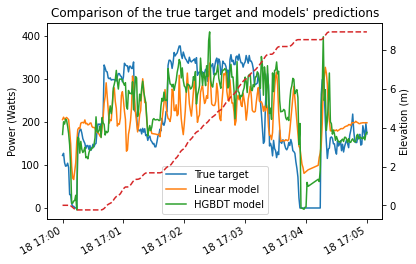

That’s the graph created by your code, but I really don’t get how you can say one model is smoother than the other. To me, the two outputs have the same roughness, or the HistGradientBoost one even looks a bit smoother, since it makes fewer up-and-down swings over most of the data.
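To make the comparison less of an eyeball judgment, here is a minimal sketch of how roughness could be put into numbers. It assumes "smooth" means that neighbouring predictions change little once they are sorted by the input feature. The helper names `roughness` and `total_variation` are hypothetical, and the two curves are synthetic stand-ins, not the predictions from your code.

```python
import numpy as np

def roughness(pred):
    """Mean absolute second difference; 0 for a perfectly straight line."""
    pred = np.asarray(pred, dtype=float)
    return float(np.mean(np.abs(np.diff(pred, n=2))))

def total_variation(pred):
    """Sum of absolute steps between neighbouring predictions."""
    pred = np.asarray(pred, dtype=float)
    return float(np.sum(np.abs(np.diff(pred))))

# Synthetic stand-ins (assumption): replace these with each model's
# predictions, ordered by the feature plotted on the x axis.
x = np.linspace(0, 2 * np.pi, 200)
smooth_curve = np.sin(x)
rng = np.random.default_rng(0)
jagged_curve = np.sin(x) + rng.normal(scale=0.1, size=x.size)

for name, curve in [("smooth", smooth_curve), ("jagged", jagged_curve)]:
    print(name, roughness(curve), total_variation(curve))
```

If both models gave similar numbers on the real predictions, that would support my impression that neither is clearly smoother. The two measures can also disagree: a curve with few but sharp jumps can have low total variation and still score high on second differences.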