Hi @MarcoDipa ,

the full quotation from the lecture is:

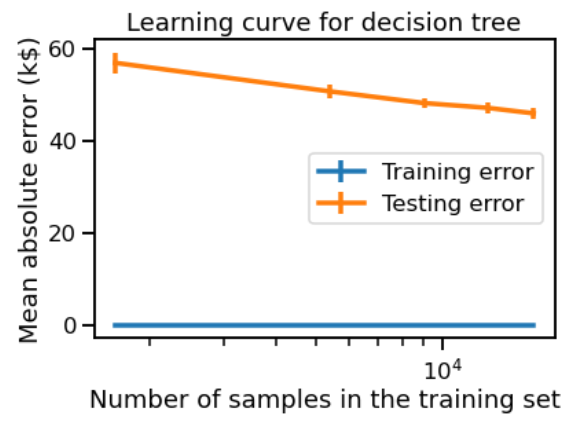

"Looking at the testing error alone, we observe that the more samples are added into the training set, the lower the testing error becomes. Also, we are searching for the plateau of the testing error for which there is no benefit to adding samples anymore or assessing the potential gain of adding more samples into the training set.

If we achieve a plateau and adding new samples in the training set does not reduce the testing error, we might have reached the Bayes error rate using the available model. Using a more complex model might be the only possibility to reduce the testing error further."

So, if I have understood correctly, the teachers did not say that they reached a plateau here, but that we could reach one if we had more samples.
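One way to make "reaching a plateau" concrete is to look at how much the testing error still drops each time the training set grows. Here is a minimal sketch of that idea in plain Python. The helper names, the 1% tolerance and the error values are all my own assumptions for illustration; they are not taken from the lecture or from its plot.

```python
def relative_improvements(test_errors):
    """Relative drop in testing error between consecutive training-set sizes.

    Assumes the errors are strictly positive and ordered by increasing
    training-set size.
    """
    return [
        (prev - curr) / prev
        for prev, curr in zip(test_errors, test_errors[1:])
    ]


def has_plateaued(test_errors, tol=0.01, n_last=2):
    """True if each of the last `n_last` steps improved the error by less than `tol`.

    `tol` and `n_last` are arbitrary choices (assumptions), not a standard rule.
    """
    steps = relative_improvements(test_errors)
    if len(steps) < n_last:
        raise ValueError("not enough points to judge a plateau")
    return all(step < tol for step in steps[-n_last:])


# Made-up testing errors for increasing training-set sizes (illustration only).
illustrative_errors = [100.0, 80.0, 70.0, 69.8, 69.7]
print(has_plateaued(illustrative_errors))
```

With real numbers you would pass the mean testing errors of your own learning curve instead of `illustrative_errors`. The tolerance is a judgment call: a stricter `tol` makes it harder to declare a plateau.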

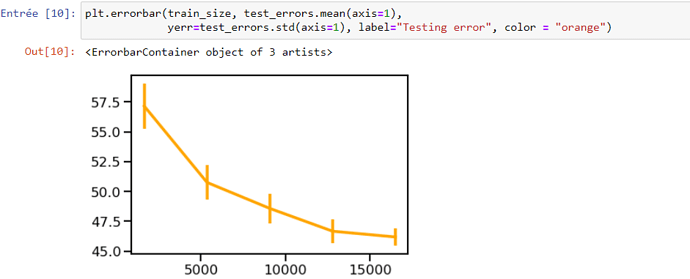

I have the feeling that we are not far from the plateau: if you plot the testing error curve with a rescaled y axis, the error barely improves over the last 2 points: