Hey @metssye,

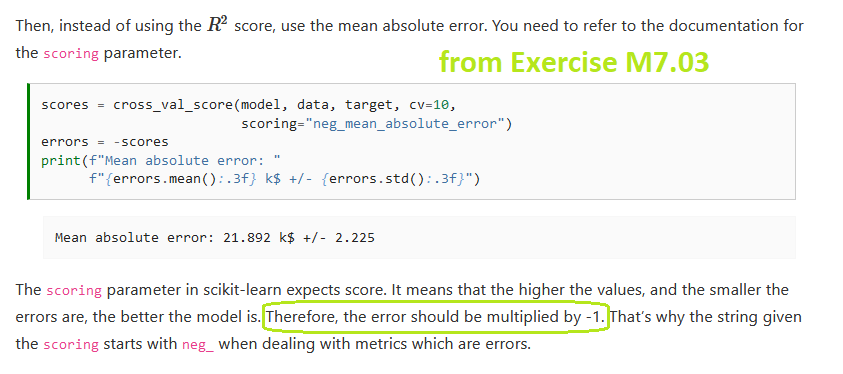

Yes, you're right: there is no direct example of using `make_scorer` with `cross_validate` here either, but you can pass a custom scoring function to `cross_validate` too. Try this code:

```python
import numpy as np
from sklearn.metrics import make_scorer
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import cross_validate


def my_custom_loss_func(y_true, y_pred):
    diff = np.abs(y_true - y_pred).max()
    return np.log1p(diff)


# Here you can use your custom loss function, or something like mean_squared_error:
my_scorer = make_scorer(my_custom_loss_func, greater_is_better=False)

# regressor_model, data and target are assumed to be already defined in the notebook.
cv_results = cross_validate(regressor_model, data, target,
                            cv=10, scoring=my_scorer,
                            return_train_score=True,
                            return_estimator=True)

# greater_is_better=False flips the sign, so these scores are negated losses (<= 0).
cv_results["test_score"].mean()
```

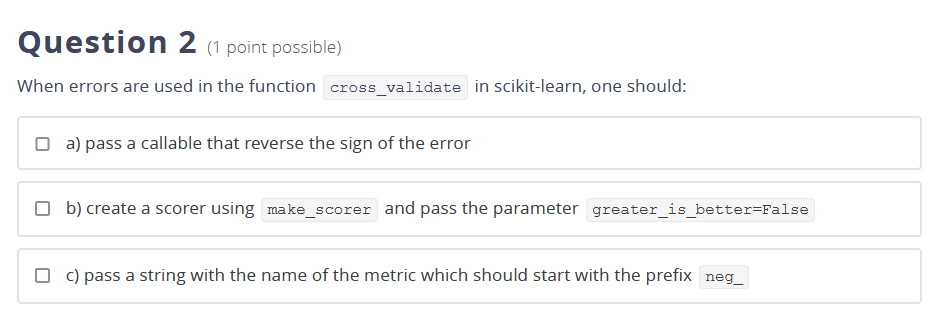
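If you want something you can run on its own, here is a minimal sketch. It swaps the notebook's `regressor_model`, `data` and `target` for a `LinearRegression` on synthetic data from `make_regression` (an assumption, not the MOOC dataset). It also shows the other option mentioned above: passing a plain function with the `(estimator, X, y)` signature straight to `scoring` (the name `max_error_scorer` is just a hypothetical example), and evaluating several scorers at once with a dict.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression
from sklearn.metrics import make_scorer, mean_squared_error
from sklearn.model_selection import cross_validate


def my_custom_loss_func(y_true, y_pred):
    # Log of (1 + largest absolute error): lower is better.
    diff = np.abs(y_true - y_pred).max()
    return np.log1p(diff)


def max_error_scorer(estimator, X, y):
    # Hypothetical plain callable with the (estimator, X, y) signature that
    # cross_validate accepts directly. Higher must mean better, so the loss
    # is negated by hand (make_scorer does this for us below).
    return -my_custom_loss_func(y, estimator.predict(X))


# Synthetic stand-ins for the notebook's data/target (assumption).
data, target = make_regression(n_samples=200, n_features=5, noise=10.0,
                               random_state=0)
regressor_model = LinearRegression()

scoring = {
    # make_scorer negates the loss because greater_is_better=False.
    "custom": make_scorer(my_custom_loss_func, greater_is_better=False),
    # Same custom loss, passed as a plain function.
    "custom_callable": max_error_scorer,
    # Built-in MSE, wrapped the same way.
    "mse": make_scorer(mean_squared_error, greater_is_better=False),
}

cv_results = cross_validate(regressor_model, data, target, cv=10,
                            scoring=scoring, return_train_score=True)

for name in scoring:
    print(name, cv_results[f"test_{name}"].mean())
```

With a dict, `cross_validate` stores each metric under `test_<name>` (and `train_<name>` when `return_train_score=True`). The `custom` and `custom_callable` scores should match, since both compute the same negated loss.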

BTW, I agree with you: the 3rd answer needs a bit more clarification, and as dear @lesteve said, it's going to be fixed for the next MOOC.