Hi,

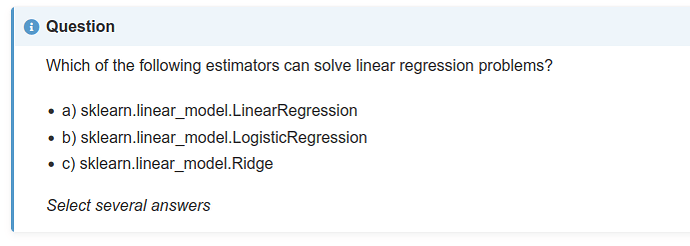

Having to choose Ridge is not really fair: the description is somewhat cryptic, and the strategy was not presented in the video. From the description alone, it is difficult to tell whether it is a classification model, a regression model, or something else…

> Linear least squares with l2 regularization.
>
> Minimizes the objective function:
>
> $$\|y - Xw\|_2^2 + \alpha \|w\|_2^2$$
>
> This model solves a regression model where the loss function is the linear least squares function and regularization is given by the l2-norm. Also known as Ridge Regression or Tikhonov regularization. This estimator has built-in support for multi-variate regression (i.e., when y is a 2d-array of shape (n_samples, n_targets)).
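To make the quoted objective concrete, here is a minimal NumPy sketch, assuming no intercept term (scikit-learn's `Ridge` fits an intercept by default, so this corresponds to `fit_intercept=False`). The helpers `ridge_fit` and `ridge_objective` are hypothetical names for illustration, not scikit-learn API. Setting the gradient of $\|y - Xw\|_2^2 + \alpha \|w\|_2^2$ to zero gives the linear system $(X^T X + \alpha I) w = X^T y$. The model predicts real-valued outputs, which is what makes it a regression model rather than a classifier.

```python
import numpy as np


def ridge_fit(X, y, alpha=1.0):
    """Hypothetical helper: minimize ||y - Xw||_2^2 + alpha * ||w||_2^2 (no intercept).

    The gradient -2 X^T (y - Xw) + 2 alpha w vanishes when
    (X^T X + alpha I) w = X^T y. y may be 1-D (n_samples,) or
    2-D (n_samples, n_targets); in the 2-D case every target column
    is solved with the same matrix, giving w of shape (n_features, n_targets).
    """
    n_features = X.shape[1]
    A = X.T @ X + alpha * np.eye(n_features)
    return np.linalg.solve(A, X.T @ y)


def ridge_objective(X, y, w, alpha):
    """Hypothetical helper: evaluate the ridge objective for given coefficients."""
    residual = y - X @ w
    return np.sum(residual ** 2) + alpha * np.sum(w ** 2)


rng = np.random.default_rng(0)
X = rng.normal(size=(50, 3))
true_w = np.array([1.5, -2.0, 0.5])
y = X @ true_w + 0.1 * rng.normal(size=50)  # continuous target: a regression task

w = ridge_fit(X, y, alpha=1.0)
print("coefficients:", w)
print("first predictions:", X[:3] @ w)  # real numbers, not class labels

# Multi-target ("multi-variate") case: y is a 2-D array (n_samples, n_targets).
Y = np.column_stack([y, 2 * y])
W = ridge_fit(X, Y, alpha=1.0)
print(W.shape)  # (3, 2): one column of coefficients per target
```

Increasing `alpha` shrinks the coefficients toward zero, which is the effect of the l2 penalty. Note that scikit-learn stores multi-target coefficients transposed, as `coef_` with shape (n_targets, n_features).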

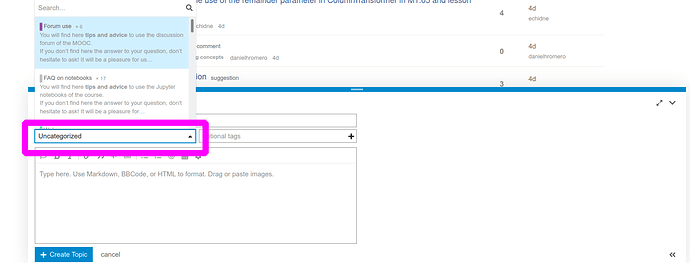

If you can edit this topic and put it in the right category, it would be great! This makes it a lot easier for us to have some context to better understand the question. Any other pieces of information that can help, like links to the FUN page, help a lot as well.
