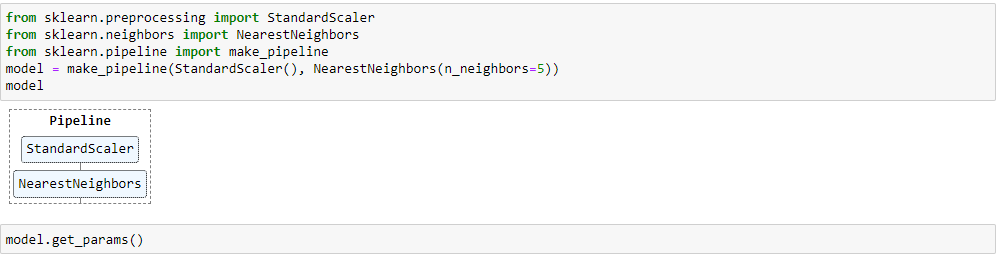

It's not NearestNeighbors().

The classifier has a different name; see Question 4.

OK

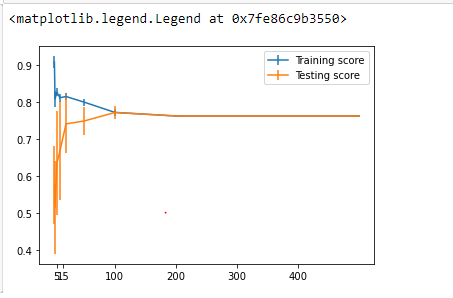

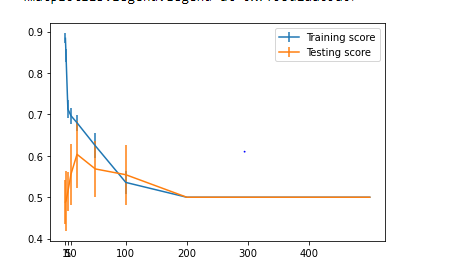

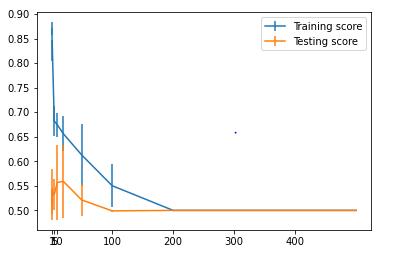

Is the following plot correct?

Mine looked a bit different.

Is that the one?

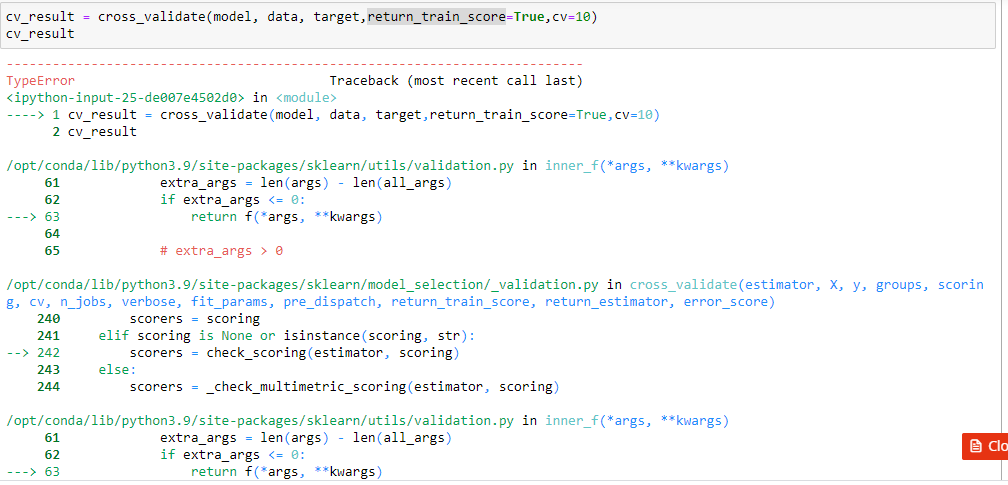

Using balanced accuracy has a significant effect on the score. Why?

Also, why should I define the cv parameter? It should default to KFold with 5 splits, but if I don't define cv, the plot changes!?

Balanced accuracy is a metric that accounts for an imbalanced ratio between classes, which plain accuracy does not. In a highly imbalanced problem, the accuracy can be high just by always predicting the majority class. Balanced accuracy corrects for this by averaging the recall obtained on each class.
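To make the difference concrete, here is a minimal sketch on a made-up target (90 samples of class 0, 10 of class 1, not the exercise data) where the "model" always predicts the majority class:

```python
from sklearn.metrics import accuracy_score, balanced_accuracy_score

# Hypothetical imbalanced target: 90 samples of class 0, 10 of class 1.
y_true = [0] * 90 + [1] * 10
# A "model" that always predicts the majority class.
y_pred = [0] * 100

# Accuracy only counts the fraction of correct predictions.
acc = accuracy_score(y_true, y_pred)
# Balanced accuracy averages the recall of each class.
bal_acc = balanced_accuracy_score(y_true, y_pred)

print(f"accuracy: {acc:.2f}")
print(f"balanced accuracy: {bal_acc:.2f}")
```

The accuracy comes out at 0.90 even though class 1 is never predicted, while the balanced accuracy is 0.50 (recall of 1.0 on class 0 and 0.0 on class 1).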

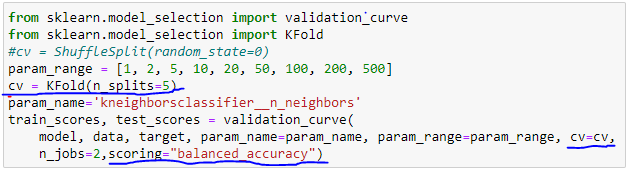

The subtlety here is that cv=5 means a stratified 5-fold cross-validation in classification and a plain 5-fold cross-validation in regression. You need to replace KFold with StratifiedKFold to get the same results as passing cv=5.
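A quick way to check this yourself is sketched below. The dataset is synthetic and the choice of KNeighborsClassifier with n_neighbors=5 is an assumption made for illustration, not the setup of the exercise:

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import KFold, StratifiedKFold, cross_val_score
from sklearn.neighbors import KNeighborsClassifier

# Synthetic, imbalanced classification data (assumption, not the course data).
X, y = make_classification(n_samples=200, weights=[0.8, 0.2], random_state=0)
# Assumed classifier, used only for illustration.
model = KNeighborsClassifier(n_neighbors=5)

# With a classifier, an integer cv is turned into StratifiedKFold.
scores_int = cross_val_score(model, X, y, cv=5, scoring="balanced_accuracy")
scores_strat = cross_val_score(
    model, X, y, cv=StratifiedKFold(n_splits=5), scoring="balanced_accuracy"
)
# Plain KFold ignores the class labels when building the folds.
scores_kfold = cross_val_score(
    model, X, y, cv=KFold(n_splits=5), scoring="balanced_accuracy"
)

same_as_stratified = np.allclose(scores_int, scores_strat)
print("cv=5 matches StratifiedKFold:", same_as_stratified)
print("cv=5 scores:  ", scores_int)
print("KFold scores: ", scores_kfold)
```

The scores for cv=5 and StratifiedKFold(n_splits=5) should be identical, while the KFold scores will generally differ because the folds are built differently.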

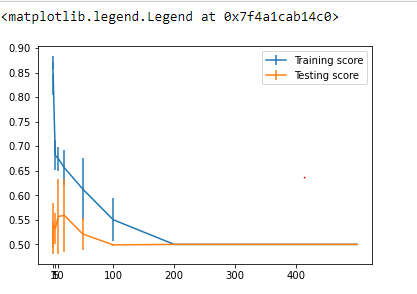

(1) with cv=5 or None

(2) with cv=KFold(n_splits=5)

Could you please clarify which one is right, (1) or (2), and why?

(1) and (2) are both correct. However, it is better to use a stratified approach in classification so that the train and test sets of each cross-validation split keep the same class distribution. So (1) is more “appropriate”.
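The effect on the class distribution can be seen in a small sketch. The target below is a made-up extreme case (labels sorted by class, 10% minority class), and `minority_fraction_per_fold` is a hypothetical helper written for this example:

```python
import numpy as np
from sklearn.model_selection import KFold, StratifiedKFold

# Made-up target sorted by class: 90 samples of class 0, then 10 of class 1.
y = np.array([0] * 90 + [1] * 10)
# The features do not matter for the split itself.
X = np.zeros((100, 1))


def minority_fraction_per_fold(cv):
    """Return the fraction of class 1 in the test set of each fold."""
    return [float(y[test_idx].mean()) for _, test_idx in cv.split(X, y)]


kfold_fractions = minority_fraction_per_fold(KFold(n_splits=5))
stratified_fractions = minority_fraction_per_fold(StratifiedKFold(n_splits=5))

print("KFold:          ", kfold_fractions)
print("StratifiedKFold:", stratified_fractions)
```

On this toy target, KFold puts every class 1 sample in the last test fold (fractions 0, 0, 0, 0, 0.5), whereas StratifiedKFold keeps the original 10% in each fold.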

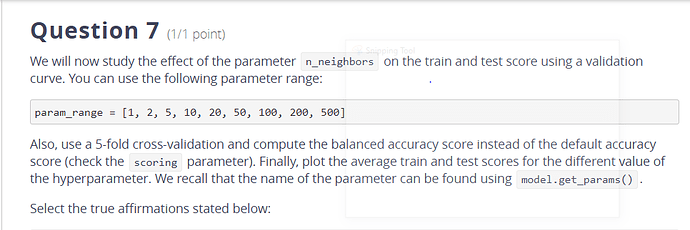

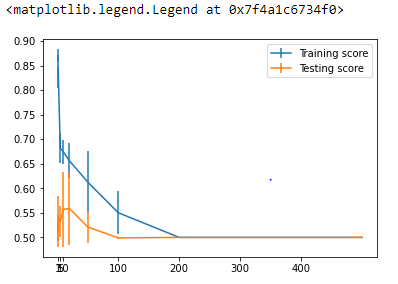

Regarding the exercise itself, be aware that we asked for 10 splits and not 5, so you can just use cv=10. Also, I find the range of n_neighbors values that you explore very large. I don’t think that we asked a question where n_neighbors is expected to be larger than 100?

You can also use plt.xscale("log") to better see the differences in score for low numbers of neighbors on your plot.
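Putting these suggestions together could look like the sketch below. It is not the exercise solution: the data is synthetic, and KNeighborsClassifier, the values in `param_range`, and the use of validation_curve are assumptions made for illustration.

```python
import matplotlib.pyplot as plt
from sklearn.datasets import make_classification
from sklearn.model_selection import validation_curve
from sklearn.neighbors import KNeighborsClassifier

# Synthetic, imbalanced data standing in for the exercise dataset.
X, y = make_classification(n_samples=300, weights=[0.8, 0.2], random_state=0)
# Illustrative range that stops at 100 neighbors.
param_range = [1, 2, 5, 10, 20, 50, 100]

# cv=10 gives a stratified 10-fold cross-validation for a classifier.
train_scores, test_scores = validation_curve(
    KNeighborsClassifier(),
    X,
    y,
    param_name="n_neighbors",
    param_range=param_range,
    cv=10,
    scoring="balanced_accuracy",
)

# Mean score across folds, with the standard deviation as error bars.
plt.errorbar(param_range, train_scores.mean(axis=1),
             yerr=train_scores.std(axis=1), label="Train score")
plt.errorbar(param_range, test_scores.mean(axis=1),
             yerr=test_scores.std(axis=1), label="Test score")
# The log scale spreads out the small values of n_neighbors.
plt.xscale("log")
plt.xlabel("n_neighbors")
plt.ylabel("Balanced accuracy")
plt.legend()
current_xscale = plt.gca().get_xscale()
```

In a script, add plt.show() at the end to display the figure.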

Oh right. I was looking at the question of Module 3. Sorry for the misinformation.