I find the description of the performance with the KNN and 10-fold cross-validation (question 6) a tad ambiguous.

It is true that if one takes the difference between the mean train score and the mean test score, the conclusion is that the model is overfitting. This is the metric proposed in the subsequent questions, but it has not yet been introduced at this point.
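For reference, here is a minimal sketch of that mean-gap check. It assumes scikit-learn's `cross_validate` and uses a synthetic dataset; neither the data nor the `n_neighbors=5` setting is taken from the exercise, so the numbers will not match it.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import cross_validate
from sklearn.neighbors import KNeighborsClassifier

# Assumption: synthetic data standing in for the exercise's dataset.
X, y = make_classification(n_samples=500, n_features=10, random_state=0)

# Assumption: n_neighbors=5 is scikit-learn's default, not the exercise's value.
model = KNeighborsClassifier(n_neighbors=5)

# 10-fold cross-validation, keeping the train scores as well as the test scores.
cv_results = cross_validate(model, X, y, cv=10, return_train_score=True)

mean_train = cv_results["train_score"].mean()
mean_test = cv_results["test_score"].mean()
gap = mean_train - mean_test  # a large positive gap suggests overfitting

print(f"mean train: {mean_train:.3f}, mean test: {mean_test:.3f}, gap: {gap:.3f}")
```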

However, if one instead checks how the scores evolve from fold to fold (that was my first idea), one finds that the model is, in practice, improving fold after fold. In that sense, the final performance after 10 folds is not so bad, with a significantly smaller gap between training and testing scores.
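The fold-by-fold view can be sketched roughly as follows, again assuming scikit-learn's `cross_validate` with a synthetic dataset and a default `KNeighborsClassifier` (both are placeholders, not the exercise's setup).

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import cross_validate
from sklearn.neighbors import KNeighborsClassifier

# Assumption: synthetic data and default hyperparameters, not the exercise's.
X, y = make_classification(n_samples=500, n_features=10, random_state=0)
model = KNeighborsClassifier()

# cross_validate fits a fresh clone of the estimator on each of the 10 splits.
cv_results = cross_validate(model, X, y, cv=10, return_train_score=True)

# One train/test gap per fold, in the order the splits were generated.
gaps = cv_results["train_score"] - cv_results["test_score"]

for fold, (train, test, fold_gap) in enumerate(
    zip(cv_results["train_score"], cv_results["test_score"], gaps), start=1
):
    print(f"fold {fold:2d}: train={train:.3f} test={test:.3f} gap={fold_gap:.3f}")
```

Since each row comes from a separately fitted model on a different split, the row order reflects how the data was split.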

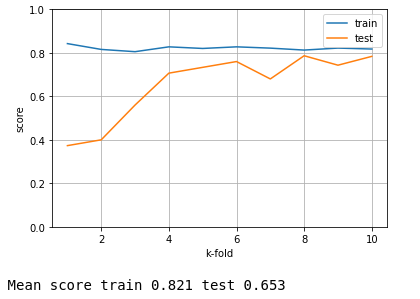

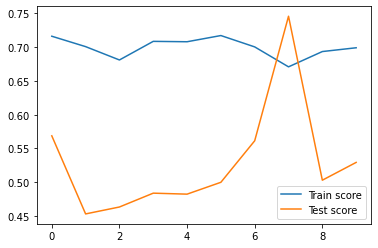

See the two images above, which show what I got in this exercise.