By definition, a score means a higher value is better. For instance, the accuracy score shows this behaviour. The minimum accuracy is 0 and the maximum (perfect classification) is 1.

On the contrary, an error means a lower value is better. For instance, the mean absolute error has a minimum of 0 which means a perfect regression with not error. The maximum can be infinity in this case.

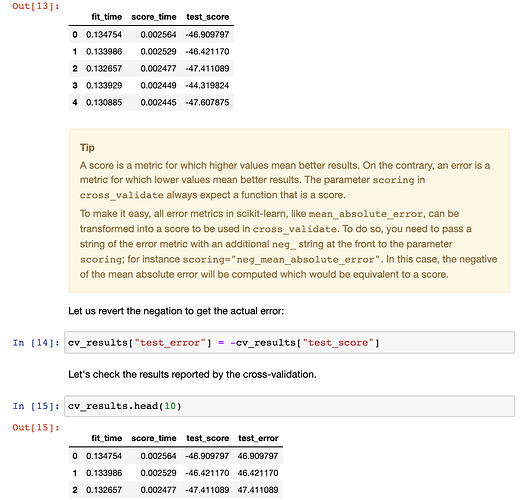

So in scikit-learn, cross_validate was designed to only consider a score (higher value means better) and not an error. Thus, a trick to transform an error into a score is to make it negative. If we take the mean absolute error: -np.inf will be the worse possible negative error and the best negative error will be 0.

Yes

Note:

Just to answer the potential question as to why scikit-learn uses negative error. You will see that in a GridSearchCV, one wants to pick up the best score or the lowest error. Thus, when one has a score, grid-search will maximize this score while with an error, one wants to minimize this error. The developers of scikit-learn decided to only accept scores such that the grid-search only rely on the score and only maximizing this score. Thus, errors are transformed into scores by multiplying -1.