Hello, i suppose i have a problem with the categorical data, but:

-

how can i debug it ? what data are involved ?

-

i think i have a problem with the replacement of missing values but i dont know where ?

Thank you for help

My code is here:

# -------------Processing of categorical data - Traitement des données de catégories

from sklearn.compose import make_column_selector as selector

categorical_columns_selector = selector(dtype_include=object)

categorical_columns = categorical_columns_selector(data)

data_categorical = data[categorical_columns]

#remplacement valeurs manquantes

imp_freq = SimpleImputer(strategy="most_frequent")

imp_freq.fit(data_categorical)

# Replace missing values in data - remplacement dans le tableau initial des colonnes de catégories avec les données manquantes

data[categorical_columns] = imp_freq.transform(data_categorical)

# ----------- Processing of numerical data - Traitement des données numériques

numerical_features = [

"LotFrontage", "LotArea", "MasVnrArea", "BsmtFinSF1", "BsmtFinSF2",

"BsmtUnfSF", "TotalBsmtSF", "1stFlrSF", "2ndFlrSF", "LowQualFinSF",

"GrLivArea", "BedroomAbvGr", "KitchenAbvGr", "TotRmsAbvGrd", "Fireplaces",

"GarageCars", "GarageArea", "WoodDeckSF", "OpenPorchSF", "EnclosedPorch",

"3SsnPorch", "ScreenPorch", "PoolArea", "MiscVal",

]

numerical_preprocessor = StandardScaler()

data_numerical = data[numerical_features]

# building pipeline and cross validation

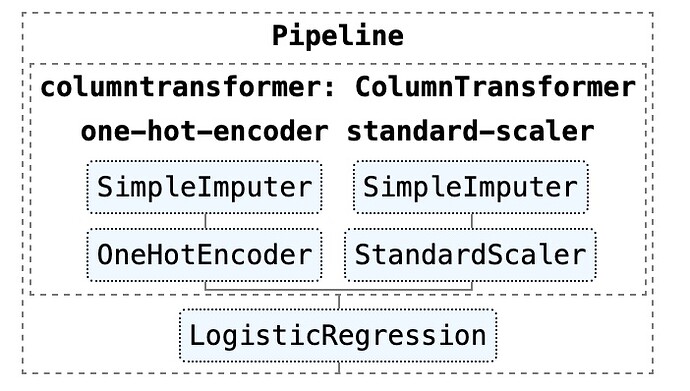

preprocessor = ColumnTransformer([

('one-hot-encoder', categorical_preprocessor, categorical_columns),

('standard-scaler', numerical_preprocessor, numerical_features)])

model_global = make_pipeline(preprocessor, LogisticRegression(max_iter=500))

cv_results = cross_validate(model_global, data, target, cv=5)

print(cv_results)

scores = cv_results["test_score"]

print(f"The mean global cross-validation accuracy is: {scores.mean():.3f} +/- {scores.std():.3f}")