Hi,

The notebook *Hyperparameter tuning by randomized-search* notes that:

> As we have more than 2 parameters in our grid-search, we cannot visualize the results using a heatmap. However, we can use a parallel coordinates plot.

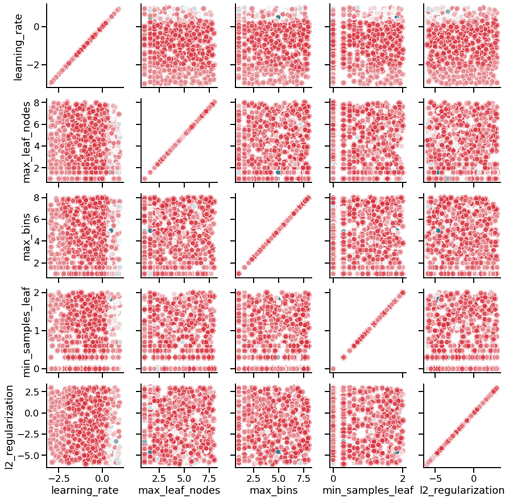

This is true. However, at least for me, the parallel coordinates plot doesn't give a clear intuition of the tradeoff among different hyperparameters. If one looks at the ranges of hyperparameters that result in a `mean_test_score` above, say, 0.85, they seem to be all over the place (except perhaps `max_bins`, which seems to be restricted to high values, > 7 after the `np.log2` transformation).

I guess the reason is the same as why there wasn't a single best pair of hyperparameters in *Hyperparameter tuning by grid-search*: we can get more or less the same fit by decreasing the values of some hyperparameters and increasing the values of others. I think this kind of tradeoff would be easier to see in pairwise heatmaps, similar to the `seaborn.pairplot` used in Module 1 but with heatmaps.

Is there a way to generate a matrix of heatmaps using matplotlib or seaborn? Thanks!
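A minimal sketch of how such a matrix might be drawn with matplotlib and pandas alone follows. It makes a few assumptions: the search results are in a pandas DataFrame with one numeric column per hyperparameter plus a `mean_test_score` column (for a real `cv_results_`, the `param_...` columns may need renaming and casting to float), and continuous values are grouped into equal-width bins, because a randomized search rarely samples the same value twice. The function name `pairwise_heatmaps`, the binning scheme and the random placeholder data are hypothetical choices for illustration, not part of the course material.

```python
import itertools

import matplotlib.pyplot as plt
import numpy as np
import pandas as pd


def pairwise_heatmaps(results, param_columns, score_column="mean_test_score", n_bins=5):
    """Hypothetical helper: draw an n x n matrix of plots for the given columns.

    Off-diagonal cell (i, j): heatmap of the mean score for every combination
    of binned values of parameter i (y axis) and parameter j (x axis).
    Diagonal cell (i, i): mean score per bin of parameter i alone.
    Bins are equal-width and numbered 0 .. n_bins - 1 from low to high values.
    """
    # Discretize each (possibly continuous) parameter into integer bin codes.
    binned = pd.DataFrame(
        {col: pd.cut(results[col].astype(float), bins=n_bins, labels=False)
         for col in param_columns}
    )
    binned[score_column] = results[score_column].to_numpy()
    bins = range(n_bins)

    # Share one colour scale across all heatmaps so panels are comparable.
    vmin, vmax = results[score_column].min(), results[score_column].max()

    n = len(param_columns)
    fig, axes = plt.subplots(n, n, figsize=(3 * n, 3 * n), squeeze=False,
                             layout="constrained")
    image = None
    for (i, row_param), (j, col_param) in itertools.product(
            enumerate(param_columns), repeat=2):
        ax = axes[i, j]
        if i == j:
            # Diagonal: mean score per bin; empty bins show up as gaps (NaN).
            per_bin = binned.groupby(row_param)[score_column].mean().reindex(bins)
            ax.plot(bins, per_bin.to_numpy(), marker="o")
            ax.set_xlabel(row_param)
            ax.set_ylabel(score_column)
        else:
            # Off-diagonal: mean score per (row bin, column bin) pair;
            # pairs with no sampled configuration stay NaN (blank cells).
            grid = (binned.groupby([row_param, col_param])[score_column].mean()
                    .unstack(col_param)
                    .reindex(index=bins, columns=bins))
            image = ax.imshow(grid.to_numpy(), origin="lower", cmap="viridis",
                              vmin=vmin, vmax=vmax, aspect="auto")
            ax.set_xlabel(col_param)
            ax.set_ylabel(row_param)
    if image is not None:
        fig.colorbar(image, ax=axes, label=score_column)
    return fig, axes


# Placeholder usage: random numbers standing in for a real cv_results_ table.
rng = np.random.default_rng(0)
params = ["learning_rate", "max_leaf_nodes", "max_bins"]
fake_results = pd.DataFrame({
    "learning_rate": 10 ** rng.uniform(-3, 0, size=50),
    "max_leaf_nodes": rng.integers(2, 256, size=50),
    "max_bins": rng.integers(2, 256, size=50),
})
fake_results["mean_test_score"] = rng.uniform(0.7, 0.9, size=50)
# Apply a log2 transform, similar to the np.log2 transformation mentioned above.
for col in params:
    fake_results[col] = np.log2(fake_results[col])
fig, axes = pairwise_heatmaps(fake_results, params)
```

Each off-diagonal panel averages the scores that fall into a pair of bins, so a diagonal band of bright cells would hint at the kind of tradeoff described above, while blank cells only mean that no sampled configuration landed in that pair of bins. The figure can be displayed with `plt.show()` or saved with `fig.savefig(...)`.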