Hello,

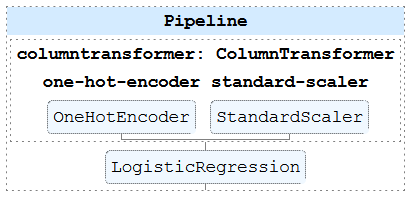

I think I missed something. In the lecture “Using numerical and categorical variables together”, we are using this pipeline:

The OneHotEncoder handling categorical data outputs zeroes and ones, while the StandardScaler handling numerical data outputs values centred around zero. This means that categorical and numerical values are not in the same range.

Why didn’t we use a Normaliser instead for the numerical data? Or put the StandardScaler after the funnel, right before the LogisticRegression?

Maybe that LogisticRegression is not affected by scaling at all, but if that’s the case, why bother with a StandardScaler for numerical data in the first place?

Thanks in advance for your answers  .

.