Hi

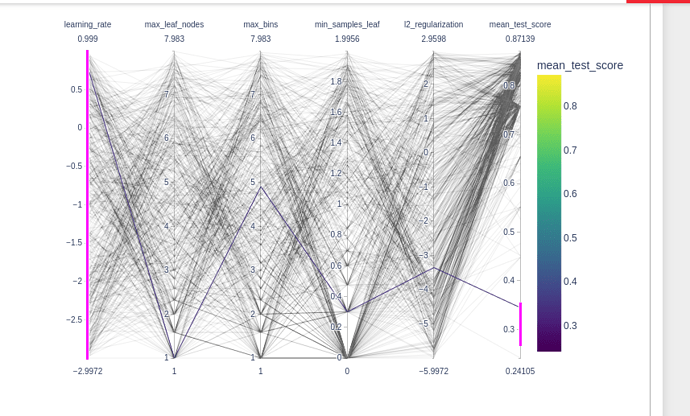

Question 4 asks: "Looking at the plot, which parameter values always cause the model to perform badly?"

I could not work out the correct answer from the graph shown in the solution.

Solution: c) and d)

All the worst-performing models have either a large or a low learning rate.

A gradient boosting model with a large learning rate will tend to overfit. This is because the sequence of added trees corrects the residuals too quickly and thus ends up fitting noisy samples. Learning rates larger than 1 can even make the optimization problem diverge, as will be explained in the chapter on ensembles.
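To see why a large learning rate overshoots, here is a toy calculation, not the course's code. It assumes squared loss and an idealized tree that reproduces the current residual exactly, so each update removes a fraction `learning_rate` of it. `residual_after` is a hypothetical helper. Real trees only approximate the residuals, so this says nothing precise about where divergence starts in practice.

```python
# Idealized boosting with squared loss: ASSUME every new tree predicts the
# current residual r exactly (a strong simplification of real trees).
# The update F <- F + learning_rate * r then leaves a residual of (1 - learning_rate) * r.

def residual_after(initial_residual, learning_rate, n_trees):
    """Residual of a single training sample after n_trees idealized boosting steps."""
    r = initial_residual
    for _ in range(n_trees):
        r = r - learning_rate * r  # tree output r, shrunk by the learning rate
    return r


for lr in (0.1, 1.0, 1.5, 2.5):
    print(lr, [round(residual_after(1.0, lr, m), 3) for m in (1, 2, 5, 10)])
```

With `learning_rate=1.0` the residual vanishes after one tree, so any noise in the target is fitted immediately. With `1.5` the residual changes sign at every step (overshooting). With `2.5` its magnitude grows at every step, which is a divergence.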

Given a fixed budget of boosting iterations (number of trees), setting a low learning rate will prevent the model from minimizing the loss even on the training set and will therefore cause underfitting.
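The underfitting side can be checked with a tiny from-scratch booster. This is a minimal sketch, not scikit-learn's implementation. It assumes squared loss, depth-1 trees (stumps), a fixed budget of 10 trees, and a noise-free toy target `y = x`. `fit_stump` and `boosting_train_mse` are hypothetical helpers.

```python
def fit_stump(x, r):
    """Least-squares depth-1 regression tree (stump) fitted to residuals r."""
    best = None
    xs = sorted(set(x))
    for lo, hi in zip(xs, xs[1:]):
        threshold = (lo + hi) / 2  # candidate split between consecutive x values
        left = [ri for xi, ri in zip(x, r) if xi <= threshold]
        right = [ri for xi, ri in zip(x, r) if xi > threshold]
        left_mean, right_mean = sum(left) / len(left), sum(right) / len(right)
        sse = (sum((ri - left_mean) ** 2 for ri in left)
               + sum((ri - right_mean) ** 2 for ri in right))
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    if best is None:  # all x identical: no split possible, predict the mean
        mean = sum(r) / len(r)
        return lambda xi: mean
    _, threshold, left_mean, right_mean = best
    return lambda xi: left_mean if xi <= threshold else right_mean


def boosting_train_mse(x, y, learning_rate, n_trees):
    """Training MSE of squared-loss gradient boosting with stumps."""
    pred = [sum(y) / len(y)] * len(y)  # start from the mean of the target
    for _ in range(n_trees):
        residuals = [yi - pi for yi, pi in zip(y, pred)]  # negative gradient of squared loss
        stump = fit_stump(x, residuals)
        pred = [pi + learning_rate * stump(xi) for xi, pi in zip(x, pred)]
    return sum((yi - pi) ** 2 for yi, pi in zip(y, pred)) / len(y)


x = list(range(20))
y = [float(v) for v in x]  # noise-free toy target
for lr in (0.01, 0.1, 0.5):
    print(lr, round(boosting_train_mse(x, y, lr, n_trees=10), 2))
```

Here the variance of `y` is 33.25. With `learning_rate=0.01`, each tree can remove at most about 2% of the remaining squared error, so after 10 trees the training MSE stays above 80% of that value. With `0.5`, the first tree alone already removes more than half of it. The model with the small learning rate has simply run out of trees before fitting even the training data.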

Tuning the learning rate is important to adjust for this trade-off in practice.

You can confirm that the learning rate is the most impactful hyperparameter by instead selecting all the top-performing models, that is, models with a `mean_test_score` significantly above 0.8, and observing that they all have learning rate values between 0.01 and 1 (that is, between -2 and 0 after taking `np.log10`).
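The same check can be done without the plot by filtering the search results directly. This is a sketch under assumptions. It assumes a `cv_results_`-style mapping such as the one exposed by scikit-learn's `GridSearchCV`/`RandomizedSearchCV`. The parameter column name depends on how the parameter was named in the search: in a pipeline it is something like `param_<step>__learning_rate`, so `param_key` is an assumption to adjust. `top_log10_learning_rates` is a hypothetical helper. It uses `math.log10` to stay dependency-free; `np.log10` gives the same values.

```python
import math


def top_log10_learning_rates(cv_results, threshold=0.8,
                             param_key="param_learning_rate"):
    """log10(learning_rate) of every candidate whose mean_test_score exceeds threshold."""
    return [
        math.log10(lr)
        for lr, score in zip(cv_results[param_key], cv_results["mean_test_score"])
        if score > threshold
    ]


# Made-up values, only to show the call; these are NOT the quiz's results.
fake_results = {
    "param_learning_rate": [0.001, 0.05, 0.5, 5.0],
    "mean_test_score": [0.62, 0.86, 0.85, 0.55],
}
print(top_log10_learning_rates(fake_results))
```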

So I tried picking the badly performing models on the right side of the plot, and I can see that a `learning_rate` that is too large makes the model perform badly. But why does a `learning_rate` that is too small also lead to bad performance?